Processing Custom IIS server logs with Fluent Bit, Wasm, and Rust

Fluent Bit is a fast, lightweight, and highly scalable log, metric, and trace processor and forwarder. It is a Cloud Native Computing Foundation graduated open-source project with an Apache 2.0 license.

Fluent Bit uses a pluggable architecture, enabling new data sources and destinations, processing filters, and other new features to be added with approved plugins. Although there are dozens of supported plugins, there may be times when no out-of-the-box plugin accomplishes the exact task you need.

Thankfully, Fluent Bit lets developers write custom scripts using Lua or WebAssembly for such instances.

WebAssembly (abbreviated Wasm) is a binary instruction format for a stack-based virtual machine. Wasm is designed as a portable compilation target for programming languages, enabling deployment on the web for client and server applications. Developer reference documentation for Wasm can be found on MDN's WebAssembly pages.

This post covers how Wasm can be used with Fluent Bit to implement custom logic and functionalities.

To achieve the desired outcomes, several tasks need to be addressed. Firstly, data validation should be implemented to ensure the accuracy and integrity of the processed information. Additionally, we should perform type conversion to ensure compatibility and consistency across different data formats.

Moreover, integrating external resources such as APIs or databases can enhance the logs by providing additional relevant information. It is crucial to apply backward compatibility, maintainability, and testability principles to the source code to ensure its longevity and ease of future modifications.

Specifically, we’ll demonstrate how to collect and parse Internet Information Services (IIS) w3c logs (with some custom modifications) and transform the raw string into a standard Fluent Bit structured JSON record.

Afterward, we will demonstrate the process of inserting this JSON structured record into ClickHouse to store the logs, enabling the application of queries to extract metrics and relevant data.

Grafana can be utilized to visualize and analyze the metrics and relevant data extracted from ClickHouse. By connecting Grafana to ClickHouse as a data source, users can create interactive dashboards and visualizations to gain insights and monitor the logs effectively. Grafana's rich features and customizable options allow for creating dynamic and informative visual representations of the data stored in ClickHouse.

What you’ll need to get started:

A Windows server with IIS enabled and the W3C log format turned on (for dev or production environments).

Docker: Using docker-compose, we will deploy Fluent Bit, ClickHouse, and Grafana on Docker Desktop.

Rust 1.62.1 (e092d0b6b 2022-07-16) or later: The Wasm plugin will be written in Rust.

Rust target wasm32-unknown-unknown: For building Wasm programs.

Familiarity with Fluent Bit concepts: Inputs, outputs, parsers, and filters. If you’re unfamiliar with these concepts, please refer to the official documentation.

Understanding the use case

Organizations today need to be able to collect and parse logs generated by IIS (Internet Information Services). In this particular use case, we will explore the significance of utilizing the Fluent Bit WebAssembly (Wasm) plugin to create custom modifications for logs collected in the w3c format.

By leveraging the Fluent Bit Wasm plugin, organizations can enhance their log processing capabilities by implementing tailored transformations and enrichments specific to their requirements. This ability empowers them to extract valuable insights and gain a deeper understanding of their IIS logs, enabling more effective troubleshooting, monitoring, and analysis of their web server infrastructure.

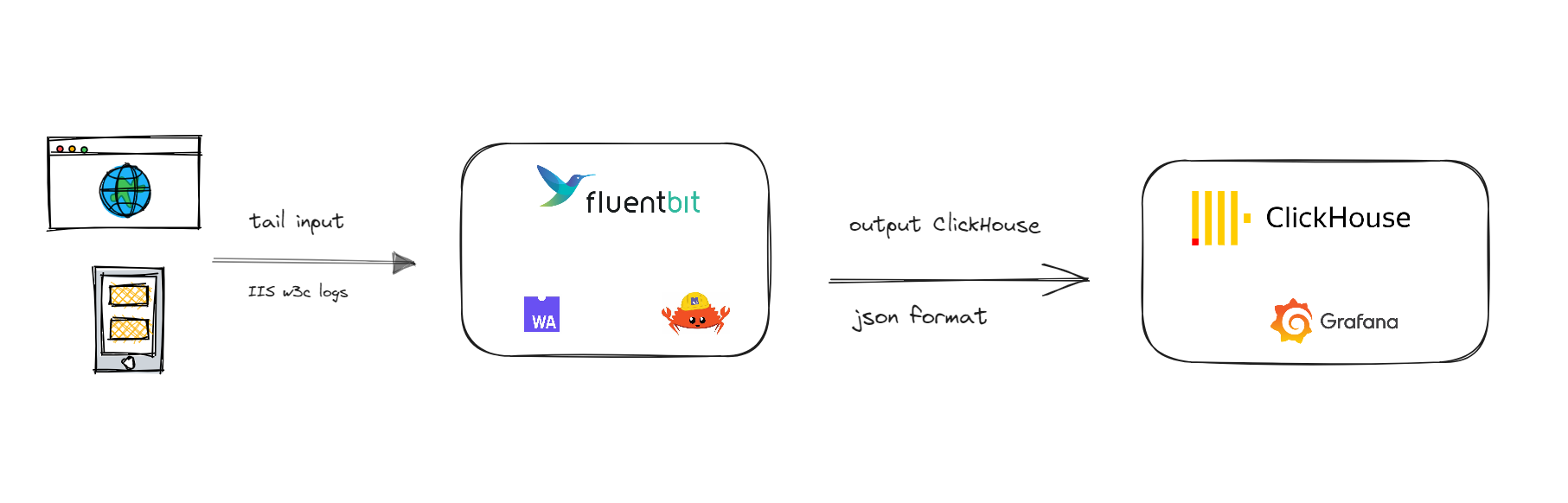

The following diagram provides an overview of the actions we will take:

This diagram highlights an interesting aspect, namely the introduction of WebAssembly in Fluent Bit. In previous versions of Fluent Bit, the workflow for this use case was relatively straightforward. Log information was extracted using parsers that relied on regular expressions or Lua code.

However, with the integration of Wasm, Fluent Bit now offers a more versatile and powerful approach to log extraction and processing. Wasm enables the implementation of custom modifications and transformations, allowing for greater flexibility and efficiency in handling log data. This advancement in Fluent Bit's capabilities opens up new possibilities for extracting and manipulating log information, ultimately enhancing the overall log processing workflow.

Currently, Fluent Bit offers an ecosystem of plugins, filters, and robust parsers with which you can build pipelines and route different workflows.

Parsers can be created with regular expressions, and custom components can be written in languages that compile to Wasm, such as C, Go, and Rust.

Our use case shows how to use Rust to develop a Wasm plugin.

Note: One of the reasons for using Rust as a programming language is that I previously developed a PoC project to learn Rust. The idea was to create a Fluent Bit-inspired log collector for IIS files, parse the logs, and send them to various destinations (Kafka, Loki, Postgres). Having that code base turned out to be interesting for combining existing logic with the proposal offered by Fluent Bit to integrate Rust with Wasm into its ecosystem.

Configure the IIS log output standard:

Enable the option to generate the logs in the W3C format of the IIS server.

Configure and set the fields to be measured.

By default, IIS w3c logs include fields that may not always provide relevant information for defining usage metrics and access patterns. Additionally, these logs may not cover custom fields specific to our use case.

One example is the c-authorization-header field, which is essential for our analysis but not included in the default log format. Therefore, it becomes necessary to customize the log configuration to include this field and any other relevant custom fields crucial to our specific requirements.

This customization ensures we can access all the necessary information to accurately define metrics and gain insights into our IIS server's usage and access patterns.

date time s-sitename s-computername s-ip cs-method cs-uri-stem cs-uri-query s-port c-ip cs(User-Agent) cs(Cookie) cs(Referer) cs-host sc-status sc-bytes cs-bytes time-taken c-authorization-header

Writing the Wasm Program

To get started, we need to create a new project for the filter. Following the official documentation, run this command in a terminal:

cargo new flb_filter_plugin --lib

The cargo new command creates a new Rust project. The --lib flag makes it a library project, which is what a Fluent Bit Wasm filter plugin needs.

Next, open the Cargo.toml file and add the following sections:

[lib]
crate-type = ["cdylib"]

[dependencies]
serde = { version = "1.0.160", features = ["derive"] }
serde_json = "1.0.104"
serde_bytes = "0.11"
rmp-serde = "1.1"
regex = "1.9.2"
chrono = "0.4.24"
libc = "0.2"

Next, open src/lib.rs and overwrite it with the following entry point code. We will explain the code in the following section.

// Imports required by the entry point below.
use chrono::{TimeZone, Utc};
use serde_json::{json, Value};
use std::io::Write;
use std::os::raw::c_char;
use std::slice;
use std::str;

// LogEntryIIS and its parser are defined elsewhere in the repository.

#[no_mangle]
pub extern "C" fn flb_filter_log_iis_w3c_custom(
    tag: *const c_char,
    tag_len: u32,
    time_sec: u32,
    time_nsec: u32,
    record: *const c_char,
    record_len: u32,
) -> *const u8 {
    // View the raw tag and record buffers passed by Fluent Bit as byte slices.
    let slice_tag: &[u8] = unsafe { slice::from_raw_parts(tag as *const u8, tag_len as usize) };
    let slice_record: &[u8] =
        unsafe { slice::from_raw_parts(record as *const u8, record_len as usize) };
    // Copy the tag into a Vec and read it as UTF-8 text.
    let mut vt: Vec<u8> = Vec::new();
    vt.write(slice_tag).expect("Unable to write");
    let vtag = str::from_utf8(&vt).unwrap();
    // The record arrives as JSON; the timestamp arrives as seconds plus nanoseconds.
    let v: Value = serde_json::from_slice(slice_record).unwrap();
    let dt = Utc.timestamp_opt(time_sec as i64, time_nsec).unwrap();
    let time = dt.format("%Y-%m-%dT%H:%M:%S.%9f %z").to_string();
    // The raw IIS line is stored under the "log" key.
    let input_logs = v["log"].as_str().unwrap();
    let mut buf = String::new();
    if let Some(el) = LogEntryIIS::parse_log_iis_w3c_parser(input_logs) {
        // Structured version of the log line, with numeric fields converted to integers.
        let log_parsered = json!({
            "date": el.date_time,
            "s_sitename": el.s_sitename,
            "s_computername": el.s_computername,
            "s_ip": el.s_ip,
            "cs_method": el.cs_method,
            "cs_uri_stem": el.cs_uri_stem,
            "cs_uri_query": el.cs_uri_query,
            "s_port": el.s_port,
            "c_ip": el.c_ip,
            "cs_user_agent": el.cs_user_agent,
            "cs_cookie": el.cs_cookie,
            "cs_referer": el.cs_referer,
            "cs_host": el.cs_host,
            "sc_status": el.sc_status,
            "sc_bytes": el.sc_bytes.parse::<i32>().unwrap(),
            "cs_bytes": el.cs_bytes.parse::<i32>().unwrap(),
            "time_taken": el.time_taken.parse::<i32>().unwrap(),
            "c_authorization_header": el.c_authorization_header,
            "tag": vtag,
            "source": "LogEntryIIS",
            "timestamp": format!("{}", time)
        });
        // Final record: the parsed log plus a few top-level columns.
        let message = json!({
            "log": log_parsered,
            "s_sitename": el.s_sitename,
            "s_computername": el.s_computername,
            "cs_host": el.cs_host,
            "date": el.date_time,
        });
        buf = message.to_string();
    }
    // Hand the serialized record back to Fluent Bit as a raw pointer.
    buf.as_ptr()
}

Program Explanation

This Rust code defines a function called flb_filter_log_iis_w3c_custom, which is intended to be used as a filter plugin in Fluent Bit with the WebAssembly module.

let slice_tag: &[u8] = unsafe { slice::from_raw_parts(tag as *const u8, tag_len as usize) };
let slice_record: &[u8] =
    unsafe { slice::from_raw_parts(record as *const u8, record_len as usize) };
let mut vt: Vec<u8> = Vec::new();
vt.write(slice_tag).expect("Unable to write");
let vtag = str::from_utf8(&vt).unwrap();
let v: Value = serde_json::from_slice(slice_record).unwrap();
let dt = Utc.timestamp_opt(time_sec as i64, time_nsec).unwrap();
let time = dt.format("%Y-%m-%dT%H:%M:%S.%9f %z").to_string();

The function takes several parameters: tag, tag_len, time_sec, time_nsec, record, and record_len. These parameters represent the tag, timestamp, and log record information passed from Fluent Bit.

The code then converts the received parameters into Rust slices (&[u8]) to work with the data. It creates a mutable vector (Vec<u8>) called vt and writes the tag data into it. The vtag variable is created by converting the vt vector into a UTF-8 string.
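The pointer-and-length handling described above can be isolated in a small helper. The sketch below is only an illustration: `bytes_to_str` is a hypothetical name that does not appear in the repository, and unlike the filter code it borrows the bytes directly instead of copying them into a Vec first.

```rust
use std::slice;
use std::str;

/// Hypothetical helper: view `len` bytes starting at `ptr` as UTF-8 text.
///
/// # Safety
/// `ptr` must point to at least `len` readable bytes that stay valid for `'a`.
pub unsafe fn bytes_to_str<'a>(ptr: *const u8, len: usize) -> Option<&'a str> {
    if ptr.is_null() {
        return None;
    }
    // Build a borrowed slice over the caller's buffer; nothing is copied.
    let bytes: &'a [u8] = unsafe { slice::from_raw_parts(ptr, len) };
    // Return None instead of panicking when the bytes are not valid UTF-8.
    str::from_utf8(bytes).ok()
}
```

Returning an Option lets the caller decide what to do with a malformed tag, whereas the unwrap() calls in the filter abort on the first bad input.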

Next, the code deserializes the record data into a serde_json::Value object called v.

The incoming structured logs are:

{"log": "2023-08-11 19:56:44 W3SVC1 WIN-PC1 ::1 GET / - 80 ::1 Mozilla/5.0+(Windows+NT+10.0;+Win64;+x64)+AppleWebKit/537.36+(KHTML,+like+Gecko)+Chrome/115.0.0.0+Safari/537.36+Edg/115.0.1901.200 - - localhost 304 142 756 1078 -"}

It also converts the time_sec and time_nsec values into a DateTime object using the Utc.timestamp_opt function and formats it as a string.

The code then reads the raw log line from v["log"] and passes it to the parser, which splits it into the individual properties of an IIS log entry, such as date, site name, computer name, IP address, HTTP method, URI, status codes, and more.

let input_logs = v["log"].as_str().unwrap();
let mut buf = String::new();
if let Some(el) = LogEntryIIS::parse_log_iis_w3c_parser(input_logs) {
    let log_parsered = json!({
        "date": el.date_time,
        "s_sitename": el.s_sitename,
        "s_computername": el.s_computername,
        "s_ip": el.s_ip,
        "cs_method": el.cs_method,
        "cs_uri_stem": el.cs_uri_stem,
        "cs_uri_query": el.cs_uri_query,
        "s_port": el.s_port,
        "c_ip": el.c_ip,
        "cs_user_agent": el.cs_user_agent,
        "cs_cookie": el.cs_cookie,
        "cs_referer": el.cs_referer,
        "cs_host": el.cs_host,
        "sc_status": el.sc_status,
        "sc_bytes": el.sc_bytes.parse::<i32>().unwrap(),
        "cs_bytes": el.cs_bytes.parse::<i32>().unwrap(),
        "time_taken": el.time_taken.parse::<i32>().unwrap(),
        "c_authorization_header": el.c_authorization_header,
        "tag": vtag,
        "source": "LogEntryIIS",
        "timestamp": format!("{}", time)
    });
    let message = json!({
        "log": log_parsered,
        "s_sitename": el.s_sitename,
        "s_computername": el.s_computername,
        "cs_host": el.cs_host,
        "date": el.date_time,
    });
    buf = message.to_string();
}
buf.as_ptr()

If the log entry can be successfully parsed using the LogEntryIIS::parse_log_iis_w3c_parser function, the code constructs a new JSON object representing the parsed log entry. It includes additional fields like the tag, source, and timestamp. The log entry and some specific fields are also included in a separate JSON object called message.

Finally, the code converts the message object to a string and assigns it to the buf variable. The function returns a pointer to the buf string, which will be used by Fluent Bit.

In summary, this code defines a custom filter plugin for Fluent Bit that processes IIS w3c log records, extracts specific fields, and constructs new JSON objects representing the parsed log entries.

The rest of the code is hosted at https://github.com/kenriortega/flb_filter_iis.git. It is an open-source project and currently provides two functions focused on the current need: parsing and processing a specific format. However, it is subject to new proposals and ideas to grow the project as a suite of possible use cases.
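The actual parser lives in the repository. Purely as an illustration of the idea, a whitespace-based parser for the 19-field layout configured earlier might look like the sketch below. `IisEntry` and `parse_w3c_line` are hypothetical names, only a few fields are kept for brevity, and the real `LogEntryIIS::parse_log_iis_w3c_parser` may work differently.

```rust
/// Hypothetical, trimmed-down stand-in for the repository's `LogEntryIIS`.
#[derive(Debug, PartialEq)]
pub struct IisEntry {
    pub date_time: String,
    pub cs_method: String,
    pub cs_uri_stem: String,
    pub sc_status: String,
    pub time_taken: String,
    pub c_authorization_header: String,
}

/// Number of space-separated fields in the custom W3C layout used in this post.
const FIELD_COUNT: usize = 19;

/// Split one log line on whitespace and pick fields by position.
/// Returns None for directive lines and for lines with an unexpected field count.
pub fn parse_w3c_line(line: &str) -> Option<IisEntry> {
    // Directive lines such as "#Fields: ..." start with '#'.
    if line.starts_with('#') {
        return None;
    }
    let f: Vec<&str> = line.split_whitespace().collect();
    if f.len() != FIELD_COUNT {
        return None;
    }
    Some(IisEntry {
        // The date and time columns are joined into one value.
        date_time: format!("{} {}", f[0], f[1]),
        cs_method: f[5].to_string(),
        cs_uri_stem: f[6].to_string(),
        sc_status: f[14].to_string(),
        time_taken: f[17].to_string(),
        c_authorization_header: f[18].to_string(),
    })
}
```

Positional splitting works here because IIS writes values such as the user agent with `+` in place of spaces, so every field is a single token. A line with a missing or extra field is rejected rather than parsed into the wrong columns.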

Instructions for Compiling the Wasm Program

To compile this plugin, we suggest consulting the official Fluent Bit documentation, which explains how to build from your local environment and how to install the Wasm target for the Rust toolchain.

$ cargo build --target wasm32-unknown-unknown --release
$ ls target/wasm32-unknown-unknown/release/*.wasm
target/wasm32-unknown-unknown/release/filter_rust.wasm

The .wasm file is named after the crate, so the name you see may differ from the one shown here. If you prefer to use the plugin from the repository, its releases section provides a build compiled automatically with GitHub Actions.

Configuring Fluent Bit To Use Wasm Plugin

To reproduce the demo, a docker-compose.yaml file is attached within the repository, displaying the necessary resources for the below steps.

version: '3.8'

volumes:
  clickhouse:

services:
  clickhouse:
    container_name: clickhouse
    image: bitnami/clickhouse:latest
    environment:
      - ALLOW_EMPTY_PASSWORD=no
      - CLICKHOUSE_ADMIN_PASSWORD=default
    ports:
      - 8123:8123

  fluent-bit:
    image: cr.fluentbit.io/fluent/fluent-bit
    container_name: fluent-bit
    ports:
      - 8888:8888
      - 2020:2020
    volumes:
      - ./docker/conf/fluent-bit.conf:/fluent-bit/etc/fluent-bit.conf
      - ./target/wasm32-unknown-unknown/release/flb_filter_iis_wasm.wasm:/plugins/flb_filter_iis_wasm.wasm
      - ./docker/dataset/:/dataset/

  grafana:
    image: grafana/grafana:latest
    environment:
      - GF_PATHS_PROVISIONING=/etc/grafana/provisioning
      - GF_AUTH_ANONYMOUS_ENABLED=false
      - GF_AUTH_ANONYMOUS_ORG_ROLE=Admin
    depends_on:
      - clickhouse
    ports:
      - "3000:3000"

Next, we configure Fluent Bit to process the logs collected from IIS. To make this tutorial more practical, we will use the dummy input plugin to generate sample logs. We provide several inputs that simulate GET and POST requests with status codes such as 200, 401, 404, and 500.

[INPUT]
    Name dummy
    Dummy {"log": "2023-07-20 17:18:54 W3SVC279 WIN-PC1 192.168.1.104 GET /api/Site/site-data qName=quww 13334 10.0.0.0 Mozilla/5.0+(Windows+NT+10.0;+Win64;+x64)+AppleWebKit/537.36+(KHTML,+like+Gecko)+Chrome/114.0.0.0+Safari/537.36+Edg/114.0.1823.82 _ga=GA2.3.499592451.1685996504;+_gid=GA2.3.1209215542.1689808850;+_ga_PC23235C8Y=GS2.3.1689811012.8.0.1689811012.0.0.0 http://192.168.1.104:13334/swagger/index.html 192.168.1.104:13334 200 456 1082 3131 Bearer+token"}
    Tag log.iis.*

[INPUT]
    Name dummy
    Dummy {"log": "2023-08-11 19:56:44 W3SVC1 WIN-PC1 ::1 GET / - 80 ::1 Mozilla/5.0+(Windows+NT+10.0;+Win64;+x64)+AppleWebKit/537.36+(KHTML,+like+Gecko)+Chrome/115.0.0.0+Safari/537.36+Edg/115.0.1901.200 - - localhost 404 142 756 1078 -"}
    Tag log.iis.get

[INPUT]
    Name dummy
    Dummy {"log": "2023-08-11 19:56:44 W3SVC1 WIN-PC1 ::1 POST / - 80 ::1 Mozilla/5.0+(Windows+NT+10.0;+Win64;+x64)+AppleWebKit/537.36+(KHTML,+like+Gecko)+Chrome/115.0.0.0+Safari/537.36+Edg/115.0.1901.200 - - localhost 200 142 756 1078 -"}
    Tag log.iis.post

[INPUT]
    Name dummy
    Dummy {"log": "2023-08-11 19:56:44 W3SVC1 WIN-PC1 ::1 POST / - 80 ::1 Mozilla/5.0+(Windows+NT+10.0;+Win64;+x64)+AppleWebKit/537.36+(KHTML,+like+Gecko)+Chrome/115.0.0.0+Safari/537.36+Edg/115.0.1901.200 - - localhost 401 142 756 1078 -"}
    Tag log.iis.post

[FILTER]
    Name wasm
    match log.iis.*
    WASM_Path /plugins/flb_filter_iis_wasm.wasm
    Function_Name flb_filter_log_iis_w3c_custom
    accessible_paths .

This filter configuration tells Fluent Bit to run a WebAssembly filter plugin on log records whose tag matches the pattern log.iis.*.

Name: set to wasm, this selects the Wasm filter plugin.

WASM_Path: the path to the WebAssembly module file that contains the filter implementation.

Function_Name: the name of the function within the WebAssembly module that implements the filter.

accessible_paths: the paths that the Wasm program is allowed to access.

The stdout output lets us inspect the result of the filter in the terminal.

[OUTPUT]
    name stdout
    match log.iis.*

The result is as follows:

2023-10-21 09:36:33 [0] log.iis.post: [[1697906192.407803136, {}], {"cs_host"=>"localhost", "date"=>"2023-08-11 19:56:44", "log"=>{"c_authorization_header"=>"-", "c_ip"=>"::1", "cs_bytes"=>756, "cs_cookie"=>"-", "cs_host"=>"localhost", "cs_method"=>"POST", "cs_referer"=>"-", "cs_uri_query"=>"-", "cs_uri_stem"=>"/", "cs_user_agent"=>"Mozilla/5.0+(Windows+NT+10.0;+Win64;+x64)+AppleWebKit/537.36+(KHTML,+like+Gecko)+Chrome/115.0.0.0+Safari/537.36+Edg/115.0.1901.200", "date"=>"2023-08-11 19:56:44", "s_computername"=>"WIN-PC1", "s_ip"=>"::1", "s_port"=>"80", "s_sitename"=>"W3SVC1", "sc_bytes"=>142, "sc_status"=>"200", "source"=>"LogEntryIIS", "tag"=>"log.iis.post", "time_taken"=>1078, "timestamp"=>"2023-10-21T16:36:32.407803136 +0000"}, "s_computername"=>"WIN-PC1", "s_sitename"=>"W3SVC1"}]

The output is a log record that has been processed by Fluent Bit with the specified filter configuration. The transformation brings the benefits of our code: data validation and type conversion. Note that sc_bytes, cs_bytes, and time_taken are now numbers rather than strings.
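The type conversion mentioned here relies on `.parse::<i32>().unwrap()` in the filter, which panics if a field is not numeric, for example when IIS writes `-` for an empty value. A more forgiving variant, sketched under the assumption that a missing value may simply be treated as absent, could look like this. `parse_count` is a hypothetical helper, not part of the repository.

```rust
/// Hypothetical helper: convert a numeric W3C field such as sc-bytes.
/// Returns None for the "-" placeholder or any other non-numeric text.
pub fn parse_count(field: &str) -> Option<u64> {
    let trimmed = field.trim();
    // IIS writes "-" when a field has no value.
    if trimmed == "-" {
        return None;
    }
    // Any parse failure becomes None instead of a panic.
    trimmed.parse::<u64>().ok()
}
```

When such a value is placed in a `json!` object, serde_json serializes `None` as `null`, so the record can still be emitted with the field left empty.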

# Should be optional.
[OUTPUT]
    name http
    tls off
    match *
    host clickhouse
    port 8123
    URI /?query=INSERT+INTO+fluentbit.iis+FORMAT+JSONEachRow
    format json_stream
    json_date_key timestamp
    json_date_format epoch
    http_user default
    http_passwd default

To ingest these logs into ClickHouse, we need to use the http output module. The http output plugin of Fluent Bit allows flushing records into an HTTP endpoint. The plugin issues a POST request with the data records in MessagePack (or JSON). The plugin supports dynamic tags, which allow sending data with different tags through the same input.

Please refer to the official documentation for more information on Fluent Bit’s HTTP output module.
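The URI value in the output configuration is simply a ClickHouse INSERT statement with its spaces written as `+`. The sketch below shows how that string is put together. `clickhouse_insert_uri` is a hypothetical helper for illustration only, and it assumes the database and table names contain no characters that need further URL encoding.

```rust
/// Hypothetical helper: build the URI used by the http output for a given table.
/// Only spaces are encoded (as '+'); names are assumed to be plain identifiers.
pub fn clickhouse_insert_uri(database: &str, table: &str) -> String {
    // JSONEachRow tells ClickHouse to expect one JSON object per line.
    let query = format!("INSERT INTO {}.{} FORMAT JSONEachRow", database, table);
    format!("/?query={}", query.replace(' ', "+"))
}
```

Calling it with "fluentbit" and "iis" yields the same URI that appears in the configuration.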

Setting up the Database Output

The ClickHouse database must have the following configuration, which was taken from the article Sending Kubernetes logs To ClickHouse with Fluent Bit.

Following the next steps, we can continue with our use case. With the structured logs parsed by our filter, it is possible to perform queries that allow us to analyze the behavior of our websites and APIs hosted on IIS.

First, we need to create the database using your client of choice.

CREATE DATABASE fluentbit;

SET allow_experimental_object_type = 1;

CREATE TABLE fluentbit.iis
(
    log JSON,
    s_sitename String,
    s_computername String,
    cs_host String,
    date Datetime
)
Engine = MergeTree ORDER BY tuple(date,s_sitename,s_computername,cs_host)
TTL date + INTERVAL 3 MONTH DELETE;

These statements are written in ClickHouse SQL. They create a database named "fluentbit" and a table named "iis" within that database. Let's break them down step by step:

`CREATE DATABASE fluentbit`: This statement creates a new database named "fluentbit". It returns an error if the database already exists; add `IF NOT EXISTS` to make it safe to run repeatedly.

`SET allow_experimental_object_type = 1;`: This setting enables the experimental JSON object type in ClickHouse, which the `log` column relies on. Experimental features may not be entirely stable or supported.

`CREATE TABLE fluentbit.iis`: This statement creates a new "iis" table within the "fluentbit" database. The table will contain the following columns:

`log`: This column has the data type `JSON`, which means it can store data in JSON format.

`s_sitename`: This column has the data type `String` and stores the site name.

`s_computername`: This column has the data type `String` and stores the computer name.

`cs_host`: This column has the data type `String` and stores the host.

`date`: This column has the data type `Datetime` and stores the date and time.

`Engine = MergeTree ORDER BY tuple(date, s_sitename, s_computername, cs_host)`: This specifies the storage engine for the "iis" table as `MergeTree`. The `MergeTree` engine is a popular ClickHouse storage engine that efficiently handles time-series data. The `ORDER BY` clause specifies the primary sorting order of the table, which is based on the columns `date`, `s_sitename`, `s_computername`, and `cs_host`.

`TTL date + INTERVAL 3 MONTH DELETE;`: This sets a Time-to-Live (TTL) rule on the table. It means that ClickHouse will automatically delete rows from the table where the `date` column is older than three months. This process helps to manage the data and keep the table size under control.

We can check that our workflow is properly working by checking the data entry:

SET output_format_json_named_tuples_as_objects = 1;
SELECT log FROM fluentbit.iis
LIMIT 1000 FORMAT JSONEachRow;

Now that we have confirmed that ClickHouse is successfully receiving data from Fluent Bit, we can perform queries that provide us with information about the performance and behavior of our sites.

For example, to get the average of time_taken (the same pattern works for sc_bytes and cs_bytes):

SELECT AVG(log.time_taken) FROM fluentbit.iis;

Another example is grouping by IP. This query is an aggregation on the "fluentbit.iis" table:

SET output_format_json_named_tuples_as_objects = 1;
SELECT COUNT(*),c_ip FROM fluentbit.iis
GROUP BY log.c_ip as c_ip;

`SELECT COUNT(*), c_ip`: This part of the query specifies the columns to select in the result. It retrieves two values: the count of rows (`COUNT(*)`) and the value of the `c_ip` column.

`FROM fluentbit.iis`: This indicates the table to select data from. In this case, it selects data from the "iis" table within the "fluentbit" database.

`GROUP BY log.c_ip as c_ip`: This clause groups the rows based on the values of the `log.c_ip` column and assigns an alias `c_ip` to the result. The `log.c_ip` represents the `c_ip` column within the `log` JSON field.

Common queries

SELECT count(*)
FROM fluentbit.iis
WHERE log.sc_status LIKE '4%';

This query calculates the count of rows that meet a specific condition in the "fluentbit.iis" table:

`SELECT count(*)`: This part of the query specifies that we want to calculate the count of rows that match the given condition.

`FROM fluentbit.iis`: This part indicates the table we want to retrieve the data from. In this case, it is the "iis" table within the "fluentbit" database.

`WHERE log.sc_status LIKE '4%'`: This clause specifies the condition that must be satisfied for a row to be included in the count. The `LIKE` operator with the pattern '4%' matches any string that starts with '4'. This condition will match statuses starting with '4', which typically represent client errors in HTTP responses (e.g., 400 Bad Request, 404 Not Found).

These and many other queries regarding the collected logs can be performed according to our needs.

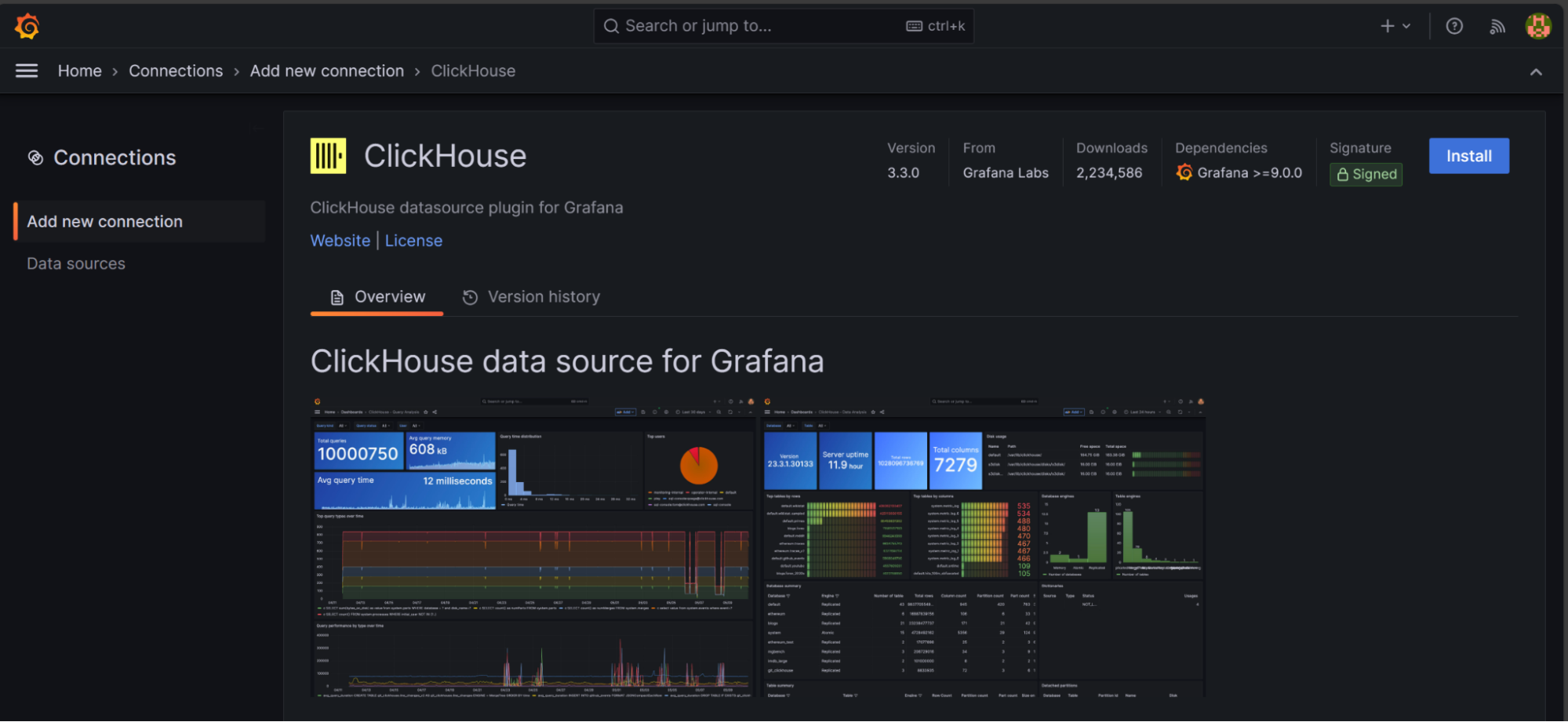

Visualizing Our Data with Grafana

Now that our records are stored in a database, we can use a visualization tool like Grafana for analysis rather than relying solely on pure SQL.

ClickHouse makes this process easy by offering a plugin for Grafana. The Grafana plugin allows users to connect directly to ClickHouse, enabling them to create interactive dashboards and visually explore their data.

With Grafana's intuitive interface and powerful visualization capabilities, users can gain valuable insights and make data-driven decisions more effectively. To learn more about connecting Grafana to ClickHouse, you can find detailed documentation and instructions on the official ClickHouse website: Connecting Grafana to ClickHouse.

Conclusion

The Fluent Bit Wasm filter approach provides us with several powerful advantages inherent to programming languages:

It can be extended by adding type conversion to fields such as sc_bytes, cs_bytes, time_taken. This is particularly useful when we need to validate our data results.

It allows conditions that express more descriptive filters, for example, "keep only the logs whose status code is in the 4xx or 5xx range".

It can be used to define an allow/deny list utilizing a data structure array or a file to store predefined IP addresses.

It makes it possible to enhance our data by calling an external resource, such as an API or database.

It allows all methods to be thoroughly tested and shared as a binary bundle or library.

Depending on our requirements, these examples can be applied in our demo and serve as an ideal starting point to create more complex logic.
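As one illustration of the filtering and deny-list ideas from the list above, the sketch below combines them into a single predicate. The function names and the policy itself are hypothetical, and how the result would be used to drop a record inside the Fluent Bit filter is left out.

```rust
use std::collections::HashSet;

/// True when the status text is a 4xx or 5xx code.
pub fn is_error_status(sc_status: &str) -> bool {
    match sc_status.trim().parse::<u16>() {
        Ok(code) => (400..600).contains(&code),
        // Non-numeric values such as "-" are not treated as errors.
        Err(_) => false,
    }
}

/// Hypothetical policy: keep error responses unless the client IP is on the deny list.
pub fn keep_record(sc_status: &str, c_ip: &str, deny_list: &HashSet<&str>) -> bool {
    is_error_status(sc_status) && !deny_list.contains(c_ip)
}
```

Because both functions are plain Rust with no Fluent Bit dependency, they can be covered by ordinary unit tests before being compiled to Wasm, which is the testability benefit mentioned above.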

Next Steps: Learn More

To learn more about Fluent Bit and its powerful data processing and routing capabilities, check out Fluent Bit Academy. There you'll find free, on-demand webinars and training sessions on a variety of Fluent Bit topics.
