Leveraging Forward Protocol in Fluent for Log Forwarding

Every organization wants an observability solution to monitor its systems. Implementing one, however, is not easy, especially for large and complex systems. Organizations need log collectors, processors, and forwarders that collect logs from various components and send them to monitoring tools. Such setups rely on specialized tools and protocols that can parse the input and route it to the right place.

One such protocol, and the subject of today’s blog post, is the forward protocol. We’ll cover the basics of what it is and how it works, and then see how it lets us send logs between Fluent Bit and Fluentd.

What is Forward Protocol?

The forward protocol is a network protocol used by Fluentd to transport log messages between nodes. It is a binary protocol, based on MessagePack, designed to be efficient and reliable. Messages are transported over TCP, and heartbeats (which can be sent over UDP) are used to check whether servers are alive.

Its lightweight design makes it suitable for transmitting logs between nodes or systems in real time. The protocol also supports buffering and retransmission of messages in case of network failures, so that log data is not lost.
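To make the wire format concrete, here is a minimal Python sketch of the protocol’s simplest event shape, Message mode: an array `[tag, time, record]` encoded with MessagePack and written to a TCP socket. It assumes a Fluentd `in_forward` listener on the default port 24224, and it uses a small hand-written encoder covering only the types needed here instead of a MessagePack library. The helper names are hypothetical, time is sent as integer seconds (the specification also defines a nanosecond-precision EventTime extension type, omitted here), and the event is sent fire-and-forget, without the acknowledgments, buffering, or retries a real client adds.

```python
import socket
import struct
import time
from typing import Optional


def pack(obj) -> bytes:
    """Minimal MessagePack encoder (nil, bool, int, float, str, list, dict).

    Output is valid MessagePack but not always the most compact form
    (e.g. ints >= 128 use uint32/uint64 rather than uint8/uint16).
    """
    if obj is None:
        return b"\xc0"
    if obj is True:
        return b"\xc3"
    if obj is False:
        return b"\xc2"
    if isinstance(obj, int):
        if 0 <= obj < 0x80:
            return struct.pack("B", obj)             # positive fixint
        if -32 <= obj < 0:
            return struct.pack("b", obj)             # negative fixint
        if 0 <= obj < 2**32:
            return b"\xce" + struct.pack(">I", obj)  # uint32
        if 0 <= obj < 2**64:
            return b"\xcf" + struct.pack(">Q", obj)  # uint64
        return b"\xd3" + struct.pack(">q", obj)      # int64 (remaining negatives)
    if isinstance(obj, float):
        return b"\xcb" + struct.pack(">d", obj)      # float64
    if isinstance(obj, str):
        data = obj.encode("utf-8")
        n = len(data)
        if n < 32:
            head = struct.pack("B", 0xA0 | n)        # fixstr
        elif n < 2**8:
            head = b"\xd9" + struct.pack("B", n)     # str8
        elif n < 2**16:
            head = b"\xda" + struct.pack(">H", n)    # str16
        else:
            head = b"\xdb" + struct.pack(">I", n)    # str32
        return head + data
    if isinstance(obj, (list, tuple)):
        n = len(obj)
        head = struct.pack("B", 0x90 | n) if n < 16 else b"\xdd" + struct.pack(">I", n)
        return head + b"".join(pack(item) for item in obj)
    if isinstance(obj, dict):
        n = len(obj)
        head = struct.pack("B", 0x80 | n) if n < 16 else b"\xdf" + struct.pack(">I", n)
        return head + b"".join(pack(k) + pack(v) for k, v in obj.items())
    raise TypeError(f"unsupported type: {type(obj).__name__}")


def message_mode_entry(tag: str, record: dict, event_time: Optional[int] = None) -> bytes:
    """Encode one event in Message mode: [tag, time, record]."""
    if event_time is None:
        event_time = int(time.time())  # integer seconds since the epoch
    return pack([tag, event_time, record])


def send_event(host: str, port: int, tag: str, record: dict) -> None:
    """Open a TCP connection and write a single Message-mode event (no ack)."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(message_mode_entry(tag, record))
```

With Fluentd running, a call such as `send_event("127.0.0.1", 24224, "fluent_bit", {"message": "hello"})` should deliver the record to whatever output Fluentd routes the `fluent_bit` tag to.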

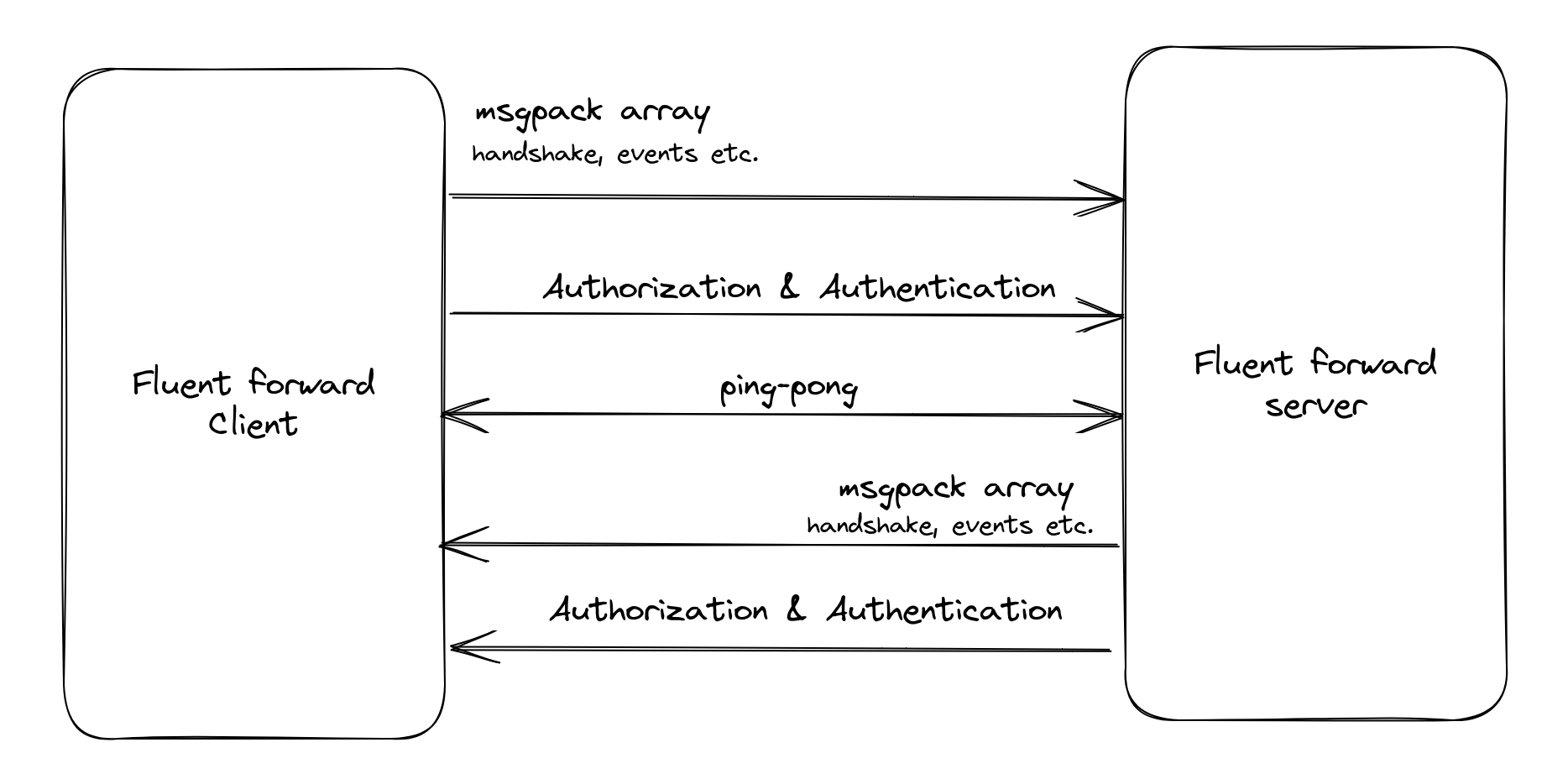

The Fluentd forward client and server are the two components that work together to send and receive log data between sources and destinations. They communicate using MessagePack arrays, and the protocol has options for authentication and authorization so that only authorized entities can send and receive logs. Read more about the forward protocol.
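As we read the Forward Protocol specification, authentication uses a HELO/PING/PONG handshake: the server sends a nonce (plus an auth salt when user authentication is enabled), the client answers with SHA-512 digests, and the server proves in its PONG that it knows the same shared key. The Python sketch below computes those digests with `hashlib`. The function names are hypothetical, message encoding and socket I/O are left out, and the field order should be checked against the specification before relying on it.

```python
import hashlib
import hmac
import os


def sha512_hex(*parts: bytes) -> str:
    """Hex SHA-512 of the concatenation of parts."""
    digest = hashlib.sha512()
    for part in parts:
        digest.update(part)
    return digest.hexdigest()


def build_ping(nonce: bytes, auth_salt: bytes, client_hostname: str, shared_key: str,
               username: str = "", password: str = "") -> list:
    """Client reply to HELO: [PING, hostname, salt, key digest, username, password digest]."""
    shared_key_salt = os.urandom(16)
    key_digest = sha512_hex(shared_key_salt, client_hostname.encode(), nonce, shared_key.encode())
    # Password digest only when the server requested user auth (non-empty auth salt).
    password_digest = (
        sha512_hex(auth_salt, username.encode(), password.encode()) if auth_salt else ""
    )
    return ["PING", client_hostname, shared_key_salt, key_digest, username, password_digest]


def verify_pong(pong: list, shared_key_salt: bytes, nonce: bytes, shared_key: str) -> bool:
    """Check the server's PONG: [PONG, auth_result, reason, server_hostname, digest]."""
    _, auth_result, _reason, server_hostname, server_digest = pong
    expected = sha512_hex(shared_key_salt, server_hostname.encode(), nonce, shared_key.encode())
    # Constant-time comparison of the two hex digests.
    return bool(auth_result) and hmac.compare_digest(server_digest, expected)
```

In practice these values come from configuration: Fluentd’s `<security>` section (`self_hostname`, `shared_key`) and Fluent Bit’s forward output options `Self_Hostname` and `Shared_Key`.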

Apart from Fluentd and Fluent Bit, Docker’s `fluentd` logging driver also uses the forward protocol to send container logs to a Fluentd collector. However, we’ll limit ourselves to Fluentd and Fluent Bit in this blog post.

The forward protocol offers the following benefits:

- It supports compressing log data to reduce the amount of data sent over the network, which can improve performance, especially where bandwidth is limited.
- It includes an optional acknowledgment mechanism: if a message is not acknowledged by the forward server, the forward client retries it.
- The protocol specification addresses backward compatibility, so older and newer versions of Fluent Bit and Fluentd can communicate with each other and upgrades are smoother.
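The acknowledgment mechanism can be sketched as follows, assuming the behavior described in the Forward Protocol specification: the client adds a unique `chunk` value to the event’s option map, and the server replies with a map `{"ack": <same chunk>}`. The Python below models only the client-side retry loop; `transport` is a hypothetical callable standing in for "encode, send, and wait for the decoded response", returning `None` on timeout.

```python
import base64
import os
from typing import Callable, Optional

# Hypothetical transport: sends one forward-protocol message and returns the
# decoded response map, or None if no response arrived before the timeout.
Transport = Callable[[list], Optional[dict]]


def new_chunk_id() -> str:
    """Random 128-bit id, base64-encoded, used to match a message with its ack."""
    return base64.b64encode(os.urandom(16)).decode("ascii")


def send_with_ack(tag: str, event_time: int, record: dict,
                  transport: Transport, max_attempts: int = 3) -> str:
    """Send [tag, time, record, {"chunk": id}] until the server acks that id.

    Resending after a lost ack can deliver the same event twice, so this
    gives at-least-once (not exactly-once) delivery.
    """
    chunk = new_chunk_id()
    message = [tag, event_time, record, {"chunk": chunk}]
    for _ in range(max_attempts):
        response = transport(message)
        if response is not None and response.get("ack") == chunk:
            return chunk
    raise TimeoutError(f"no ack for chunk {chunk} after {max_attempts} attempts")
```

You would not normally write this yourself: Fluentd’s forward output exposes it as `require_ack_response`, and Fluent Bit’s forward output as `Require_ack_response`.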

Understanding Fluent Bit and Fluentd

Both Fluentd and Fluent Bit are popular logging solutions in the cloud-native ecosystem. They are designed to handle high volumes of logs and provide reliable log collection and forwarding capabilities. Fluent Bit is lightweight and more suitable for edge computing and IoT use cases.

In this section, we’ll take a closer look at the differences between the two tools and at a use case where you’d want to use both of them together.

| | Fluentd | Fluent Bit |
|---|---|---|
| Scope | Containers / Servers | Embedded Linux / Containers / Servers |
| Language | C & Ruby | C |
| Memory | > 60 MB | ~1 MB |
| Performance | Medium Performance | High Performance |
| Dependencies | Built as a Ruby Gem, it requires a certain number of gems. | Zero dependencies, unless some special plugin requires them. |
| Plugins | More than 1000 external plugins are available | More than 100 built-in plugins are available |
| License | Apache License v2.0 | Apache License v2.0 |

Both Fluent Bit and Fluentd can act as forwarders or aggregators, and they can be used together or standalone. One use case for combining them is to use Fluent Bit to collect logs from containerized applications running in a Kubernetes cluster: its low footprint makes it practical to deploy on every node. Meanwhile, Fluentd can collect logs from sources outside Kubernetes, such as servers, databases, and network devices.

Ultimately, the choice between Fluentd and Fluent Bit depends on the specific needs and requirements of the use case at hand.

In the next section, let’s see how we can use the forward protocol to push data from Fluent Bit to Fluentd.

Using Forward Protocol With Fluent Bit and Fluentd

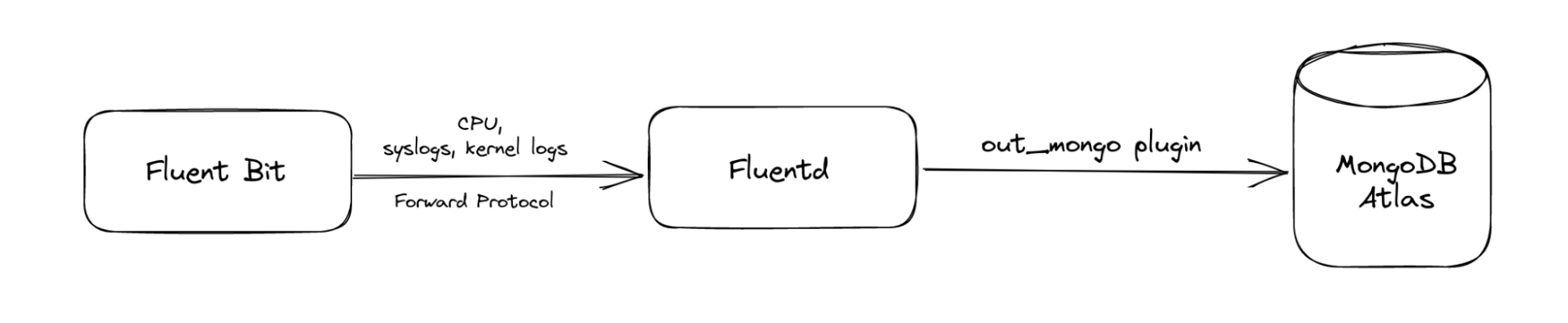

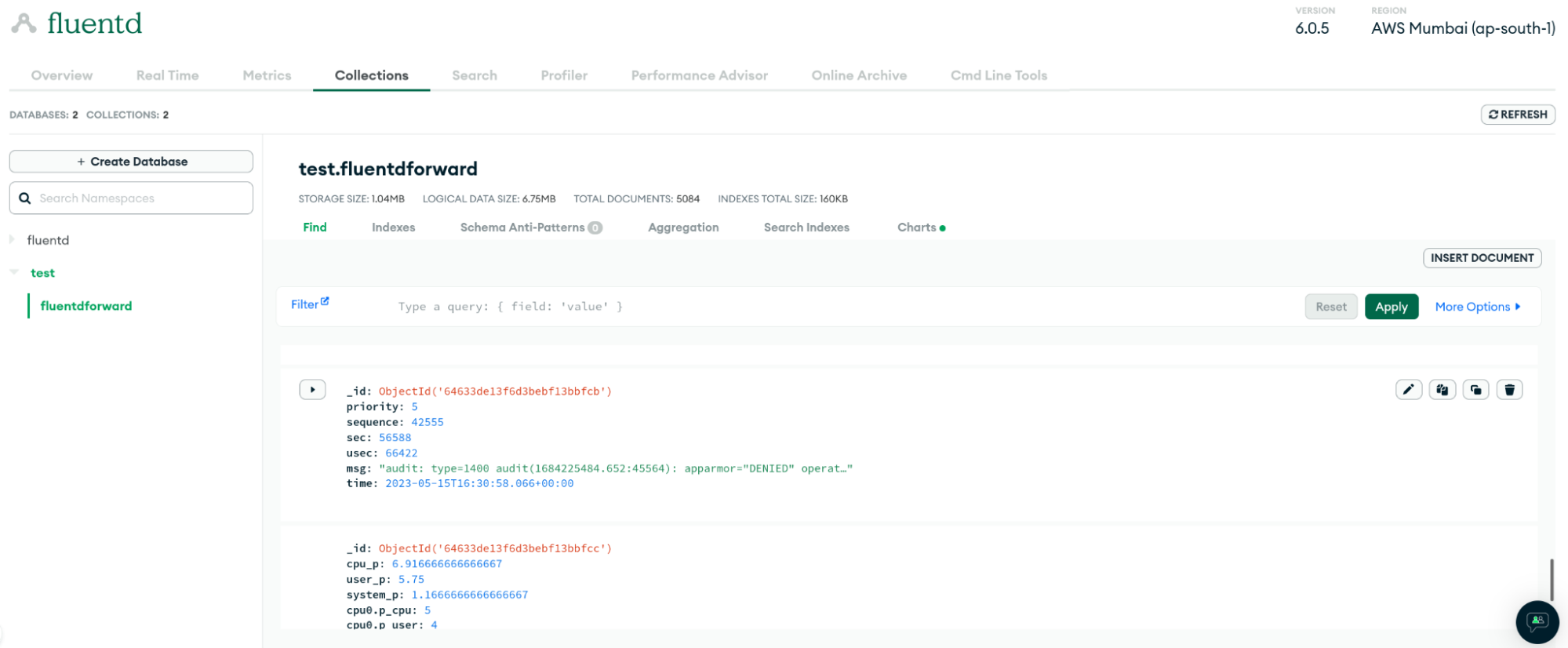

To understand how the forward protocol works, we’ll set up one instance each of Fluent Bit and Fluentd. We’ll collect CPU metrics and system logs with Fluent Bit, send them to Fluentd over the forward protocol, and from there push them to MongoDB Atlas.

MongoDB Atlas Configuration

MongoDB Atlas is a cloud-based database service that lets users easily deploy, manage, and scale MongoDB databases. It offers features such as automatic backups, monitoring, and security, making it a convenient and reliable way to manage data in the cloud. That also makes it an easy destination for this demo, so we’ll push our logs from Fluentd to MongoDB Atlas.

To do that, we need to:

1. Create a new database by navigating to the Collections tab.
2. Provide a database and collection name.
3. Once it is created, click the Connect button and fetch the connection string.

Apart from this, you might also have to:

- Create a new database user with the `Read and write to any database` permission.
- Under the Network section, create a policy that allows connections from all IP addresses. Note: if you’re using this in production, allow only the IPs that you’ll use to access the database.
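One detail worth checking when you copy the Atlas connection string: if the database user’s name or password contains characters such as `@`, `:`, or `/`, they must be percent-encoded or the URI will not parse correctly. A small Python helper using the standard library’s `urllib.parse.quote_plus`; the function name and the cluster host below are hypothetical placeholders.

```python
from urllib.parse import quote_plus


def atlas_connection_string(user: str, password: str, host: str, db: str = "test") -> str:
    """Build a mongodb+srv URI with percent-encoded credentials."""
    return (
        f"mongodb+srv://{quote_plus(user)}:{quote_plus(password)}@{host}/{db}"
        "?retryWrites=true&w=majority"
    )


# Placeholder host: use the one shown in your Atlas "Connect" dialog.
print(atlas_connection_string("fluentduser", "p@ss:w/rd", "cluster0.example.mongodb.net"))
# prints mongodb+srv://fluentduser:p%40ss%3Aw%2Frd@cluster0.example.mongodb.net/test?retryWrites=true&w=majority
```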

Fluentd Configuration

The first step is to configure Fluentd to receive input from a forward source. After you install Fluentd (plus the fluent-plugin-mongo gem, which provides the `mongo` output used below), update the configuration file with the following:

```
<source>
  @type forward
  bind 0.0.0.0
  port 24224
</source>

# Fluentd routes each event to the first <match> whose pattern matches its tag,
# so both outputs live inside a single copy block.
<match fluent_bit>
  @type copy
  <store>
    @type stdout
  </store>
  <store>
    @type mongo
    database fluentd
    collection fluentdforward
    # Replace the placeholders with the connection string fetched from Atlas
    connection_string "mongodb+srv://fluentduser:<password>@<cluster-host>/test?retryWrites=true&w=majority"
  </store>
</match>
```

In the above configuration, we define the source type as `forward` and provide a bind address and port. The `<match fluent_bit>` directive consumes every event tagged `fluent_bit` and, through the `copy` output, sends each one both to stdout and to MongoDB Atlas, for which we’ve provided the database, collection, and connection string.
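Fluentd sends each event to the first `<match>` block whose pattern matches the event’s tag; later blocks with the same pattern never see it. The Python sketch below models that routing rule together with the `*` (exactly one tag part) and `**` (zero or more tag parts) wildcards. It is a simplified illustration with hypothetical function names, not Fluentd’s implementation, and it ignores `{a,b}` alternatives and regex patterns.

```python
from typing import List, Optional, Tuple


def tag_matches(pattern: str, tag: str) -> bool:
    """Simplified Fluentd tag matching: '*' = one tag part, '**' = zero or more parts."""
    return _match(pattern.split("."), tag.split("."))


def _match(pattern_parts: List[str], tag_parts: List[str]) -> bool:
    if not pattern_parts:
        return not tag_parts
    head, rest = pattern_parts[0], pattern_parts[1:]
    if head == "**":
        # Try letting '**' consume zero, one, two, ... tag parts.
        return any(_match(rest, tag_parts[i:]) for i in range(len(tag_parts) + 1))
    if not tag_parts:
        return False
    if head == "*" or head == tag_parts[0]:
        return _match(rest, tag_parts[1:])
    return False


def route(tag: str, match_blocks: List[Tuple[str, str]]) -> Optional[str]:
    """Return the output of the first block whose space-separated patterns match."""
    for patterns, output in match_blocks:
        if any(tag_matches(p, tag) for p in patterns.split()):
            return output
    return None  # no block matched this tag


blocks = [("fluent_bit", "stdout"), ("fluent_bit", "mongo")]
print(route("fluent_bit", blocks))  # prints stdout: the second block never sees the event
```

In Fluentd itself, sending the same events to several destinations is done with `@type copy` and one `<store>` per destination.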

After this, all you need to do is start the Fluentd service if it is not running already. It will not show any output at the moment since we have not yet configured Fluent Bit to forward the logs.

Fluent Bit Configuration

On the Fluent Bit side, we need to configure the INPUT and OUTPUT plugins.

INPUT

```
[INPUT]
    Name cpu
    Tag  fluent_bit

[INPUT]
    Name kmsg
    Tag  fluent_bit

[INPUT]
    Name systemd
    Tag  fluent_bit
```

With this, we collect CPU metrics, kernel messages, and systemd journal logs, and tag all of them `fluent_bit`.

OUTPUT

```
[OUTPUT]
    Name  forward
    Match *
    # Address of Fluentd's forward input; change it if Fluentd runs on another host
    Host  127.0.0.1
    Port  24224
```

For output, we use the `forward` output plugin, which sends the logs to the specified host and port.
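Before restarting Fluent Bit, it can help to confirm that Fluentd’s forward listener is reachable at the host and port configured in the `[OUTPUT]` section. A minimal Python check, assuming the values used in this post (127.0.0.1:24224); the function name is hypothetical, and a successful connection only shows that something is listening on TCP, not that it speaks the forward protocol.

```python
import socket


def forward_port_open(host: str = "127.0.0.1", port: int = 24224, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if __name__ == "__main__":
    print("Fluentd forward input reachable:", forward_port_open())
```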

Save the configuration and restart the Fluent Bit service. If everything is correct, you’ll see the logs being printed by Fluentd. Then open your MongoDB Atlas UI and refresh the collection; you should see the logs there as well.

This way, we use the forward protocol to share logs between Fluent Bit and Fluentd. You can also use the forward protocol with other products that support it to gather logs from different sources and push them to different tools.

Summary

Monitoring is an essential part of maintaining a healthy system and ensuring that it is performing as expected. By using a logging solution such as Fluentd or Fluent Bit, organizations can gain insights into their systems and troubleshoot issues quickly.

The forward protocol provides a seamless way for Fluent Bit to send log data to Fluentd for further processing and storage. Whether you need Fluentd’s full-featured, mature log collection or Fluent Bit’s lightweight, efficient log collection for edge and IoT devices, there’s a solution for every organization’s needs.

Moreover, you can use the forward protocol with other products that support it, and use a tool like Calyptia Core to build an efficient pipeline that ingests data and forwards it to a backend of your choice. Calyptia Core is a low-code UI that lets you configure, manage, and monitor your data pipeline. Learn more about Calyptia Core and see how you can use it to achieve better observability.
